Nano Banana 2's reasoning-guided architecture enables perfect text rendering, complex scene composition, and visual reasoning capabilities that traditional diffusion models can't match. Available now on fal with simple API integration.

Nano Banana 2

The infrastructure breakthrough that makes enterprise-grade image generation with perfect typography and visual reasoning accessible at scale

Nano Banana 2 has launched, bringing revolutionary reasoning-guided image synthesis that developers can integrate today. This comprehensive guide covers everything you need to know about Nano Banana 2's API, from its groundbreaking Plan-Evaluate-Improve architecture to practical implementation strategies for perfect text rendering and visual reasoning.

Whether you're building UI mockup generators, educational content tools, or marketing automation platforms, Nano Banana 2's reasoning layer solves the problems that have plagued AI image generation: garbled text, spatial inconsistencies, and inability to handle technical content. With native 4K generation across 10 aspect ratios, it's production-ready for enterprise workflows.

Nano Banana 1 vs. 2: The Technical Evolution

What's the difference between reasoning-guided and traditional diffusion models?

The leap from Nano Banana 1 is a fundamental architectural shift from pattern matching to logical reasoning in image generation.

| Feature | Nano Banana 1 | Nano Banana 2 |
|---|---|---|
| Architecture | Pure diffusion model | Reasoning-guided (Gemini 3.0 + GemPix 2) |
| Text Rendering | Pattern-based (often incorrect) | Validated typography |
| Resolution Options | Fixed output | Native 1K/2K/4K |
| Aspect Ratios | Limited options | 10 native ratios |
| Prompt Processing | Keyword matching | Logical validation |
| Visual Reasoning | None | Equations, diagrams, structural accuracy |
| Generation | Single-pass diffusion | Plan → Evaluate → Improve |

When you prompt Nano Banana 1 for "a coffee shop with an 'OPEN' sign," you get a diffusion model's best guess, often resulting in garbled text. The model learned patterns of what signs look like but has no concept of what "OPEN" actually means.

Nano Banana 2 takes a fundamentally different approach. It's built on Gemini 3.0 Pro's "brain" for reasoning combined with GemPix 2's "hand" for execution, connected by a shared latent intent vector that fuses text reasoning with pixel generation. The reasoning layer understands that "OPEN" is a specific four-letter word that must be rendered accurately, validates the output, and iterates until correct.

This architectural difference extends beyond text. The reasoning layer validates spatial relationships, mathematical accuracy, and compositional logic. Traditional diffusion models lack the framework to validate outputs against logical requirements.

Exclusive Nano Banana 2 Capabilities

Perfect Text Rendering

The reasoning layer validates text character-by-character, transforming what was previously AI image generation's biggest limitation into a reliable capability.

The validation loop ensures consistency across variations. Generate four versions of a product mockup and all four will spell "PREMIUM" correctly, maintaining brand integrity across iterations.

Best practices for text accuracy:

- Put text in quotes: "sign reading 'SALE'"

- Specify positioning: "centered," "above door," "in corner"

- Limit to 3-5 text elements per image

- Use standard typography for best results
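These practices can be captured in a small prompt builder, so every required string is quoted and explicitly placed. This is a minimal sketch: `TextElement` and `build_text_prompt` are hypothetical helpers (not part of any fal library), and the five-element cap simply encodes the 3-5 guideline.

```python
from dataclasses import dataclass

MAX_TEXT_ELEMENTS = 5  # encodes the "3-5 text elements per image" guideline


@dataclass
class TextElement:
    """One string that must appear verbatim in the image (hypothetical helper type)."""
    text: str      # exact string to render, e.g. "SALE"
    carrier: str   # the object carrying the text, e.g. "sign"
    position: str  # explicit placement, e.g. "above door"


def build_text_prompt(scene: str, elements: list[TextElement]) -> str:
    """Compose a prompt that quotes every required string and states where it goes."""
    if len(elements) > MAX_TEXT_ELEMENTS:
        raise ValueError(f"use at most {MAX_TEXT_ELEMENTS} text elements, got {len(elements)}")
    parts = [scene]
    for el in elements:
        # Quote the exact string and pin its placement.
        parts.append(f"{el.carrier} reading '{el.text}' {el.position}")
    return ", ".join(parts)


prompt = build_text_prompt(
    "Storefront at dusk, clean sans-serif typography",
    [
        TextElement("SALE", "sign", "centered above door"),
        TextElement("OPEN", "neon sign", "in corner of window"),
    ],
)
print(prompt)
```

The resulting string can be passed as the `prompt` argument of any request; raising an error for too many elements keeps oversized text requirements from reaching the model at all.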

Visual Reasoning

How do I create accurate diagrams and equations with generative AI?

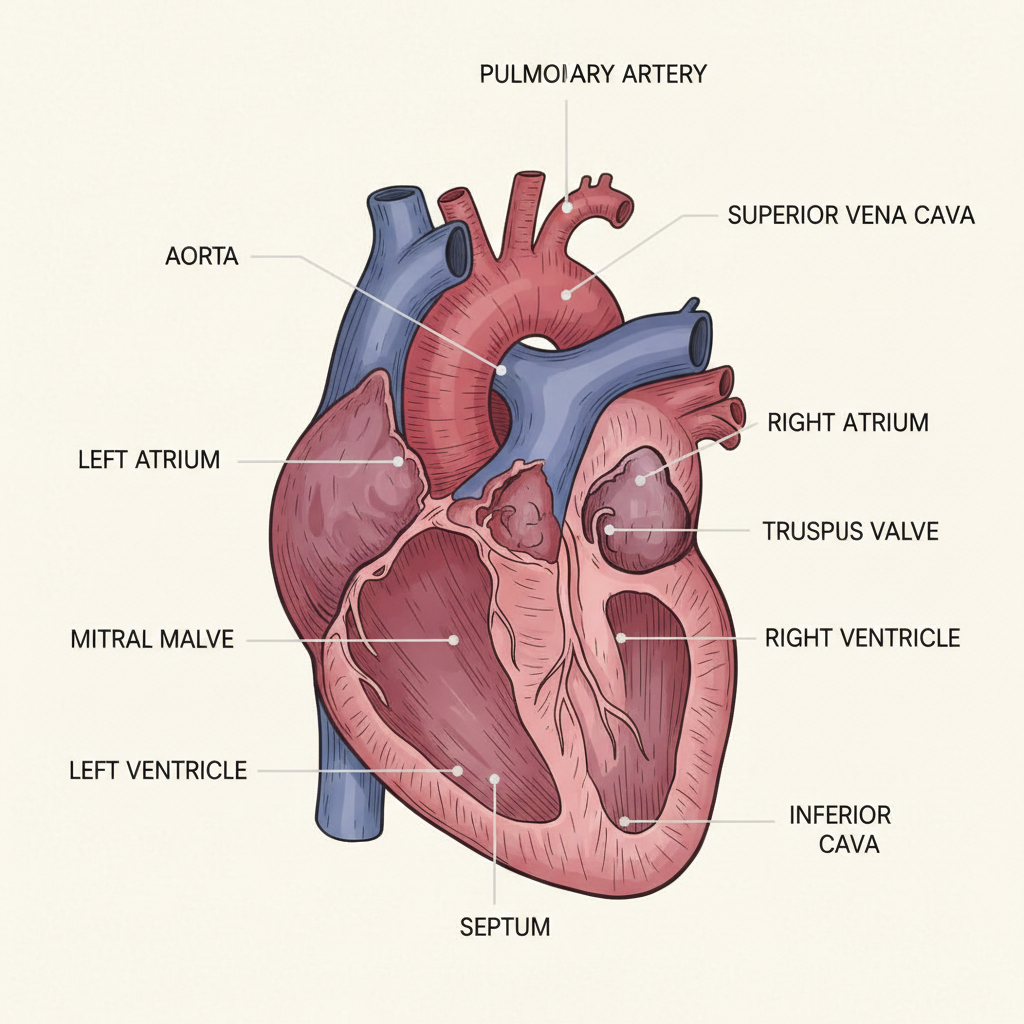

Beyond text rendering, the reasoning layer enables structural accuracy in technical diagrams and educational content. Where traditional diffusion models might generate something that looks diagram-like, Nano Banana 2 validates the logical relationships between labels, anatomical features, and connecting lines.

The validation loop ensures that anatomical terms point to the correct structures, that flow arrows indicate logical sequences, and that technical specifications maintain accuracy. This level of reasoning about visual information structure was previously impossible with pure pattern-matching approaches.

Visual Reasoning: Nano Banana 1 vs. Nano Banana 2

Prompt: "Human heart cross-section anatomical diagram with detailed labels: 'SUPERIOR VENA CAVA' at top, 'RIGHT ATRIUM', 'TRICUSPID VALVE', 'RIGHT VENTRICLE', 'INFERIOR VENA CAVA', 'AORTA', 'PULMONARY ARTERY', 'LEFT ATRIUM', 'MITRAL VALVE', 'LEFT VENTRICLE', 'SEPTUM' in center, lines pointing from each label to correct anatomical feature, medical textbook illustration style, cross-sectional view showing internal chambers, red and blue color coding for oxygenated and deoxygenated blood"

Left: Nano Banana 1 struggling with label placement precision and maintaining the complex spatial relationships required for educational accuracy. Right: Nano Banana 2 correctly positioning all anatomical labels with accurate terminology and proper leader lines pointing to correct structures.

Use cases:

- Medical and anatomical diagrams with precise labeling

- Mathematical equations with correct notation

- Technical flowcharts with accurate structure

- Educational diagrams preserving data relationships

- Scientific visualizations maintaining accuracy

Example prompts:

```text
Whiteboard: quadratic equation
Equation: ax² + bx + c = 0
Solution: x = (-b ± √(b²-4ac)) / (2a)
Clean handwriting, blue marker
```

```text
Business flowchart:
Box 1: 'Receive Order'
Arrow to Box 2: 'Process Payment'
Arrow to Box 3: 'Ship Product'
Professional diagram style
```

```text
Circuit schematic:
Battery labeled '9V'
Connected to resistor '100Ω'
Connected to LED
Wire completing circuit
Current flow arrows
```

Complex Spatial Composition

Complex prompts requiring specific spatial relationships often collapse in traditional diffusion models. Where Nano Banana 1 might generate the individual elements but lose track of their precise arrangement, Nano Banana 2's reasoning architecture validates spatial relationships before final render.

This extends to challenging scenarios like mirror reflections, where the model must understand which elements appear in the reflection versus the actual scene, a logical distinction that requires reasoning beyond pattern matching.

Traditional models struggle with these scenarios because they treat all visual elements as equivalent patterns. Nano Banana 2's validation loop confirms: Is the poster text showing in the mirror as it should? Is the towel text on the actual towel, not in the reflection? Are the spatial relationships logically consistent?

Spatial Composition: Nano Banana 1 vs. Nano Banana 2

Prompt: "Bathroom mirror shot showing reflection of a motivational poster on the wall behind the viewer, poster text reading 'NEVER GIVE UP' visible in the mirror reflection, toothbrush holder on sink counter with label 'FRESH' visible in actual scene (not reflection), towel with embroidered text 'HOTEL LUXE' hanging on right side of actual wall"

Left: Nano Banana 1 treating all text elements equivalently without understanding the spatial logic of reflections. Right: Nano Banana 2 correctly understanding mirror reflection logic—poster text appears reversed in the mirror while actual scene elements like the towel and toothbrush holder appear normally.

Aspect Ratio Intelligence

Nano Banana 2 composes natively for each aspect ratio. The model understands how visual weight and focal points shift across different aspect ratios.

A 9:16 vertical isn't a cropped 16:9. It's composed with vertical mobile viewing in mind. A 21:9 ultrawide creates true panoramic scope, not stretched standard dimensions.

Understanding the Reasoning Architecture

Nano Banana 2's design coordinates two specialized systems: a Gemini 3.0 "brain" that plans and validates, and a GemPix 2 "hand" that executes synthesis. This separation of concerns lets the system check each output against an explicit plan before returning it.

The Three-Stage Loop

Plan: The Gemini 3.0 reasoning layer analyzes your prompt and creates a structured generation plan, identifying required text elements, spatial relationships, visual hierarchy, and technical constraints.

Evaluate: After GemPix 2 generates the initial image, the reasoning layer validates against the plan: Does the text match exactly? Are spatial relationships correct? Does the composition make logical sense?

Improve: If validation fails, the reasoning layer provides specific feedback for regeneration: "The text reads 'Logn' instead of 'Login', regenerate with correct spelling." This continues until validation passes.
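A toy, client-side version of the loop makes the control flow concrete. This is an illustration under simplifying assumptions, not how Gemini 3.0 or GemPix 2 are actually implemented: the hypothetical `plan` step only extracts strings in single quotes, the `render` callable stands in for the image generator and reports which strings it drew, and a real evaluator would have to inspect pixels (for example with OCR).

```python
import re
from typing import Callable

# A stand-in "renderer": (prompt, feedback) -> text strings that ended up in the image.
Renderer = Callable[[str, list[str]], list[str]]


def plan(prompt: str) -> list[str]:
    """Plan: extract the exact strings the image must contain (text in single quotes)."""
    return re.findall(r"'([^']+)'", prompt)


def evaluate(required: list[str], rendered: list[str]) -> list[str]:
    """Evaluate: return feedback for every required string not rendered exactly."""
    return [f"text '{want}' is missing or misspelled; render it exactly"
            for want in required if want not in rendered]


def plan_evaluate_improve(prompt: str, render: Renderer, max_rounds: int = 3) -> tuple[list[str], int]:
    """Run the loop until evaluation passes or the round budget is exhausted."""
    required = plan(prompt)
    feedback: list[str] = []
    for round_no in range(1, max_rounds + 1):
        rendered = render(prompt, feedback)
        # Improve: any feedback is handed to the renderer on the next round.
        feedback = evaluate(required, rendered)
        if not feedback:
            return rendered, round_no
    raise RuntimeError(f"validation still failing after {max_rounds} rounds: {feedback}")


def flaky_renderer(prompt: str, feedback: list[str]) -> list[str]:
    """Stub generator: misspells 'Login' on the first pass, fixes it once given feedback."""
    return ["Login"] if feedback else ["Logn"]


result, rounds = plan_evaluate_improve("UI mockup with a button reading 'Login'", flaky_renderer)
print(result, rounds)
```

The round budget matters: a loop that "continues until validation passes" still needs an upper bound, and surfacing the last feedback on failure is what turns a subtly wrong image into a clear failure.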

This architecture is why Nano Banana 2 excels at tasks that confound traditional models. The multi-stage Plan → Evaluate → Improve loop, similar to chain-of-thought reasoning, enforces a level of logical consistency that pure pattern matching cannot achieve.

For developers, this means prompting differently. You're giving instructions to a reasoning system, not just describing visual patterns. Explicit requirements ("text must read exactly 'SALE'") work better than implicit suggestions ("sale signage"), because the reasoning layer can only validate what the prompt states. In return, you are more likely to get valid outputs or clear failures than subtly wrong results.

Resolution & Parameter Guide

Nano Banana 2 generates natively at target resolution rather than upscaling, producing sharper text, cleaner edges, and better overall quality.

Resolution Selection

1K (Speed-Optimized)

- Best for: Social media, web content, rapid prototyping

- Use cases: Instagram posts, website images, concept validation

- Cost: Standard rate

2K (Balanced)

- Best for: Professional presentations, marketing materials, print-adjacent

- Use cases: LinkedIn posts, presentation decks, product mockups

- Cost: Moderate premium

4K (Maximum Quality)

- Best for: Large-format prints, billboards, commercial photography

- Use cases: Trade show graphics, magazine ads, premium content

- Cost: Premium rate
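If your application serves several destinations, the tiers above reduce to a lookup. The use-case keys, the 2K default for unknown uses, and the `pick_resolution` helper are illustrative assumptions, not part of the API; only the `"1K"`/`"2K"`/`"4K"` values come from the `resolution` parameter used in this guide's examples.

```python
# Hypothetical mapping from intended use to the resolution tiers described above.
RESOLUTION_BY_USE = {
    # 1K: speed-optimized
    "social": "1K", "web": "1K", "prototype": "1K",
    # 2K: balanced
    "presentation": "2K", "marketing": "2K", "product_mockup": "2K",
    # 4K: maximum quality
    "large_format_print": "4K", "billboard": "4K", "trade_show": "4K",
}


def pick_resolution(use: str, draft: bool = False) -> str:
    """Return "1K" for drafts; otherwise the tier for `use`, defaulting to "2K"."""
    if draft:
        return "1K"  # iterate cheaply, then regenerate at the final tier
    return RESOLUTION_BY_USE.get(use, "2K")


print(pick_resolution("billboard"), pick_resolution("billboard", draft=True))
```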

Parameter Framework

Aspect Ratio Guide:

- Social: Instagram feed (1:1), Stories/TikTok (9:16), YouTube (16:9)

- Marketing: Website heroes (21:9 or 16:9), email headers (16:9)

- Product: UI mockups (16:9), mobile screens (9:16)

Output Format:

- PNG: Text, UI mockups, transparency needs (lossless: larger files, no compression artifacts)

- JPEG: Photorealistic scenes without text (smaller files)

- WebP: Best for modern web (typically smaller than JPEG at comparable quality)

Batch Generation:

Use num_images to generate 2-4 variations for selection, then regenerate winners at higher resolution.
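The parameter guidance above can be folded into one helper that assembles the `arguments` dictionary for `fal_client.subscribe`. The preset names and the `build_arguments` helper are hypothetical; the ratio and format choices mirror the lists above, and the parameter names match those used in the Python example in this guide.

```python
# Aspect-ratio presets taken from the guide above (preset names are illustrative).
ASPECT_RATIO_PRESETS = {
    "instagram_feed": "1:1",
    "stories": "9:16",
    "tiktok": "9:16",
    "youtube": "16:9",
    "website_hero": "21:9",
    "email_header": "16:9",
    "ui_mockup": "16:9",
    "mobile_screen": "9:16",
}


def build_arguments(prompt: str, target: str, has_text: bool, for_web: bool = False,
                    resolution: str = "1K", num_images: int = 1) -> dict:
    """Assemble request arguments using the aspect-ratio and format guidance above."""
    if target not in ASPECT_RATIO_PRESETS:
        raise ValueError(f"unknown target {target!r}; choose from {sorted(ASPECT_RATIO_PRESETS)}")
    if has_text:
        output_format = "png"   # lossless keeps glyph edges crisp
    elif for_web:
        output_format = "webp"  # smaller files for modern browsers
    else:
        output_format = "jpeg"  # photorealistic scenes without text
    return {
        "prompt": prompt,
        "aspect_ratio": ASPECT_RATIO_PRESETS[target],
        "resolution": resolution,
        "num_images": num_images,
        "output_format": output_format,
    }


args = build_arguments("Hero banner with headline 'SPRING SALE'", "website_hero",
                       has_text=True, num_images=4)
print(args)
```

The returned dictionary can be passed as `arguments=` to `fal_client.subscribe`; starting at 1K with 2-4 images and raising `resolution` only for the chosen prompt follows the batch advice above.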


API Architecture and Implementation

Nano Banana 2 follows fal's standard queue-based pattern: `subscribe` submits the request to the queue, waits for it to complete, and returns the final result.

Python Implementation

```python
import fal_client

# Basic generation
result = fal_client.subscribe(
    "fal-ai/nano-banana-pro",
    arguments={
        "prompt": "Modern coffee shop with 'OPEN' neon sign, warm lighting, customers at tables",
        "aspect_ratio": "16:9",
        "resolution": "2K",
        "num_images": 1,
        "output_format": "png",
    },
)

# Access generated images
for image in result["images"]:
    print(f"Generated: {image['url']}")
```

JavaScript Implementation

```javascript
import { fal } from "@fal-ai/client";

const result = await fal.subscribe("fal-ai/nano-banana-pro", {
  input: {
    prompt:
      "Product mockup: smartphone displaying 'NEW' notification, coffee cup beside it",
    aspect_ratio: "1:1",
    resolution: "2K",
    output_format: "png",
  },
  onQueueUpdate: (update) => {
    if (update.status === "IN_PROGRESS") {
      console.log("Generation in progress...");
    }
  },
});

// The client wraps the model output in `data` (alongside `requestId`).
console.log("Generated:", result.data.images);
```

Production Pattern: Dynamic Model Selection

Not every prompt needs reasoning capabilities. Route intelligently:

```python
import re

# Keywords suggesting the prompt needs accurate text or visual reasoning.
TEXT_INDICATORS = ["text reading", "sign", "label", "button", "ui"]
REASONING_INDICATORS = ["equation", "diagram", "flowchart"]

# Whole-word, case-insensitive match (optional plural "s"), so "UI" and "signs"
# match, but "build" or "design" do not.
_NEEDS_REASONING = re.compile(
    r"\b(" + "|".join(map(re.escape, TEXT_INDICATORS + REASONING_INDICATORS)) + r")s?\b",
    re.IGNORECASE,
)


def select_model(prompt: str) -> str:
    """Route to the optimal model based on the prompt's requirements."""
    # Text or reasoning needs? Use Nano Banana 2
    if _NEEDS_REASONING.search(prompt):
        return "fal-ai/nano-banana-pro"
    # Pure artistic? Use the faster model
    return "fal-ai/nano-banana"


# Usage
model = select_model("Coffee shop with 'OPEN' sign")  # Returns "fal-ai/nano-banana-pro"
model = select_model("Sunset over mountains")  # Returns "fal-ai/nano-banana" (faster)
```

Performance Considerations

Reasoning-guided synthesis requires more computation than pure diffusion, but the quality improvements justify the tradeoff for text-critical and complex use cases.

Generation Time Patterns

- 1K Resolution: Baseline (fastest for iteration)

- 2K Resolution: ~1.5-2x longer than 1K

- 4K Resolution: ~2-3x longer than 1K

The reasoning validation loop adds overhead, but it reduces the need for manual post-processing and repeated regeneration attempts.
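For capacity planning, the approximate multipliers above can be turned into a rough time budget. This sketch assumes you have measured your own 1K baseline and that jobs run one after another; `estimate_seconds` is a hypothetical helper, and real latencies will vary (for example with queue load and the number of validation rounds).

```python
# Approximate generation-time multipliers relative to 1K, from the ranges above.
TIME_MULTIPLIER = {
    "1K": (1.0, 1.0),
    "2K": (1.5, 2.0),
    "4K": (2.0, 3.0),
}


def estimate_seconds(baseline_1k_seconds: float, resolution: str,
                     num_jobs: int = 1) -> tuple[float, float]:
    """Return a (low, high) wall-clock estimate for num_jobs sequential requests."""
    if baseline_1k_seconds <= 0:
        raise ValueError("baseline must be a positive, measured duration")
    low, high = TIME_MULTIPLIER[resolution]
    return baseline_1k_seconds * low * num_jobs, baseline_1k_seconds * high * num_jobs


print(estimate_seconds(10.0, "4K"))
```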

Cost Optimization

How do I optimize costs when generating high-resolution AI images?

Generate variations at 1K, select the best, then regenerate at target resolution:

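```python
import fal_client

prompt = "Logo: 'TECH CO' text, modern minimal"

# Step 1: Test at 1K (fast, cheap)
variations = fal_client.subscribe(
    "fal-ai/nano-banana-pro",
    arguments={"prompt": prompt, "resolution": "1K", "num_images": 4},
)
for i, image in enumerate(variations["images"]):
    print(f"Variation {i}: {image['url']}")

# Step 2: Select the winner, fold what you liked about it into the prompt,
# then regenerate at 4K. This samples a new image, so the result may differ
# from the chosen variation.
refined_prompt = prompt + ", bold geometric lettering"  # illustrative refinement
final = fal_client.subscribe(
    "fal-ai/nano-banana-pro",
    arguments={"prompt": refined_prompt, "resolution": "4K", "num_images": 1},
)
print(f"Final: {final['images'][0]['url']}")
```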

Future Roadmap

The reasoning-guided approach represents a fundamental shift in generative AI architecture. Expected developments:

Enhanced reasoning: Deeper logical validation enabling more complex scenes and improved technical content handling.

Faster inference: Optimization of the reasoning loop to reduce generation times while maintaining quality.

Extended modalities: The plan-evaluate-improve pattern could extend to video generation with logical consistency across frames.

Fine-tuning support: Domain-specific reasoning models for specialized content (medical diagrams, architectural renderings, legal documents).

The convergence of perfect text rendering, visual reasoning, and native 4K generation creates unprecedented opportunities:

- Content Automation: Scale production for social media and marketing

- Creative Tools: Integrate AI into existing workflows

- Educational Content: Generate accurate diagrams and technical materials

- Rapid Prototyping: Test concepts before production

Getting Started

Nano Banana 2 is available now through fal, bringing reasoning-guided image synthesis to production applications with enterprise-grade infrastructure.

Integration Paths

Direct API Access: For maximum control and customization

Platform Integration: Through fal for rapid deployment

Client Libraries:

```bash
pip install fal-client  # Python
npm install @fal-ai/client  # JavaScript
```
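Server-side, both clients read the fal API key from the `FAL_KEY` environment variable by default. A small guard like the hypothetical `require_fal_key` below makes a missing key fail at startup with a clear message rather than as an authentication error in the middle of a request.

```python
import os


def require_fal_key() -> str:
    """Fail fast with a clear message if the FAL_KEY environment variable is unset."""
    key = os.environ.get("FAL_KEY", "").strip()
    if not key:
        raise RuntimeError("FAL_KEY is not set; export your fal API key before calling fal_client")
    return key
```

Call it once when your service starts, before the first `fal_client.subscribe` request.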
